- JPB-UG-1: Aerial photography change detection (CS/AI/Maths) (Jia Ng)

- JPB-UG-2: Vision system for chess-playing robot (CS/AI/Maths) (Gregory Ives)

- JPB-UG-2b: Vision system for chess-playing robot (CS/AI/Maths) (Lawrence Burvill)

- JPB-UG-3: Hearing simulator for MIRo robot (CS/AI/Maths) (Thomas Croasdale)

- JPB-UG-4: Blink detection for web navigation (CS/AI/Maths) (Trisha Goel)

- JPB-UG-5: Face pixelation for video anonymisation (CS/AI/Maths) (Georgica Bors)

- JPB-UG-8: Red Bull PIV video analysis (Daniel Reynolds)

JPB-UG-1: Aerial photography change detection (CS/AI/Maths)

Description

Unmanned aerial vehicles (UAVs) are now routinely used for aerial surveillance. These vehicles automatically collect and relay image or video data and are capable of surveying large geographical regions. In addition to simply mapping a region, the data can be used to detect change over time. There are many applications. For example, ecologists might be interested in monitoring the change in land use over time, or intelligence services might be interested in detecting the sudden appearance of new military installations or the movement of military assets.

The automatic change detection task, which would previously have been performed by humans, can now be performed using standard computer vision techniques. Although in essence it is quite simple (compare two images and mark regions that are different), in practice it is complicated by the fact that not all change is relevant. For example, the system needs to ignore changes due to differences in lighting, shadows, image alignment, etc.
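The basic idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the two images are already aligned and are given as nested lists of greyscale intensities, and the function names, the threshold of 30 and the zero-mean normalisation are all hypothetical choices rather than part of the project specification. A real system would work on OpenCV images and would also have to cope with shadows and misalignment.

```python
def mean_intensity(image):
    """Average pixel value of a greyscale image given as a list of rows."""
    total = sum(sum(row) for row in image)
    count = sum(len(row) for row in image)
    return total / count


def change_mask(before, after, threshold=30):
    """Mark pixels whose intensity differs by more than `threshold`.

    Each image is shifted to zero mean first, so that a uniform change in
    lighting between the two photographs is not reported as change.
    The threshold value is an assumption and would need tuning.
    """
    mean_before = mean_intensity(before)
    mean_after = mean_intensity(after)
    mask = []
    for row_b, row_a in zip(before, after):
        mask.append([
            abs((a - mean_after) - (b - mean_before)) > threshold
            for b, a in zip(row_b, row_a)
        ])
    return mask
```

With this sketch, an image pair that differs only by a constant brightness offset produces an empty mask, while a single strongly altered pixel is flagged on its own.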

This project will use the OpenCV computer vision toolkit to build and experiment with a change detection system. It will be evaluated using the Aerial Image Change Detection (AICD) dataset – an existing dataset of 1000 image pairs for which the regions of change have been precisely labelled. For an example image pair see the AICD website.

Prerequisites

- Experience in Python programming.

- An interest in data-driven computing / machine learning.

Further reading

- AICD website - the change detection dataset.

- OpenCV Python tutorial

- A recent review of change detection research

JPB-UG-2: Vision system for chess-playing robot (CS/AI/Maths)

JPB-UG-2b: Vision system for chess-playing robot (CS/AI/Maths)

Description

Imagine playing chess against your computer using a standard chess board. Such a system would require three components: i) a computer vision system to allow the computer to see what move the human has made; ii) a chess engine to work out the next move; iii) a mechanism to allow the computer to move the piece. This project will concentrate on the first component. The project will capture data from a video camera that is looking down on the board. It will then need to analyse the video input to detect when a move has been made, and to work out which piece has been moved from where to where. This sounds like a difficult vision problem, but it can be made simpler by using knowledge of the game itself (i.e., by knowing what moves are possible at each stage). The project will then use an open-source chess engine (many are available) to generate the computer’s move. The computer could then either instruct the human to update the board (e.g., using speech synthesis, “Move my bishop to square E4”) or - in theory - could be used to control a robot arm to move the piece.
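One way to picture how knowledge of the game simplifies the vision problem: if the vision system only reports which squares look different between two frames, the list of legal moves narrows the interpretation considerably. The sketch below is hypothetical. The function name `infer_move`, the square-name strings and the idea that legal moves arrive as (from, to) pairs from a chess engine or rules library are all assumptions made for illustration.

```python
def infer_move(changed_squares, legal_moves):
    """Return the legal moves consistent with the squares seen to change.

    changed_squares: square names (e.g. {"e2", "e4"}) that the vision
        system reports as having changed between two frames.
    legal_moves: iterable of (from_square, to_square) pairs, assumed to be
        supplied by a chess engine or rules library for the current position.
    """
    changed = set(changed_squares)
    # Keep every legal move whose source and destination both changed.
    # Extra changed squares (noise, or the rook in castling) are tolerated.
    return [
        (src, dst) for src, dst in legal_moves
        if {src, dst} <= changed
    ]
```

If exactly one candidate is returned, the move is identified without recognising the piece at all. Several candidates (as can happen with castling) or none would need further checks, such as waiting for another frame.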

This project will require good programming skills. The project may make use of the OpenCV computer vision library.

Requirements

- Experience in Python programming.

Reading

- Wikipedia (Chess engines) - Useful information about chess engines.

- OpenCV Python tutorial

- Gonzalez and Woods, Digital Image Processing, Addison-Wesley Pub. Co, Reading, Massachusetts, 1992. (or any other similar textbook)

JPB-UG-3: Hearing simulator for MIRo robot (CS/AI/Maths)

Description

The MIRo robot is a low-cost biomimetic robot designed as a ‘companion robot’. The robot is based on a pet dog and has a pair of microphones mounted inside moveable ears. We have been working with a specially adapted 8-microphone version of the robot for which we will be developing machine listening algorithms.

The aim of this project is to build a MIRo hearing simulator. This simulator would be a software system that enables us to estimate the signals that the MIRo would receive when moving in a predefined manner in a predefined acoustic scene. The software would allow us to generate simulated data for training machine listening algorithms (such as speech recognition) and save the expense of making real recordings with an actual robot.

The project will make use of measurements that have already been made of the robot motor noise and microphone behaviour. Techniques for scene simulation are well understood and would not be the main focus of the project. Rather, the project would focus on the interface aspects, i.e., providing the standard algorithms with an easily usable GUI. One possibility is to make the simulator available via a client-server architecture so that users could access it as a web service.

Further reading

For further details see here.

Prerequisites

- Experience in Python programming.

- An interest in audio signal processing.

JPB-UG-4: Blink detection for web navigation (CS/AI/Maths)

Description

This project will develop and test blink detection as a means to control a standard web-browser. The goal will be to evaluate this as a technology for disabled users who are unable to use a conventional keyboard or touch-driven interface.

The project builds on a project that ran this year which built a Chrome web browser extension that allows the browser to be operated using a brain-computer interface (BCI). This system works but the BCI technology is hard to use for many users. The new project will build a video-based blink detection system that can interface with this modified web browser to provide an alternative control mechanism that many users will find easier to operate, and which avoids the intrusion of having to wear a headset.

There has been a lot of recent work on automatic blink detection (e.g., it is used to monitor driver alertness in some cars). The techniques are well understood and can be easily implemented in recent computer vision and machine learning toolkits. However, challenges remain in working out how best to integrate the technology with a web browser, and how to produce a system that won’t be prone to false positives (e.g., distinguishing deliberate blinks from blinks that occur spontaneously).
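One simple way to reduce false positives, sketched below, is to accept only blinks that are held for longer than a spontaneous blink would normally last. This is an illustration, not a recommended design: it assumes some earlier stage has already produced one "eye closed" flag per video frame, and the function name and the `min_frames` value of 15 are hypothetical and would have to be tuned against real users and frame rates.

```python
def deliberate_blinks(eye_closed, min_frames=15):
    """Return (start, end) frame indices of eye closures of at least `min_frames` frames.

    eye_closed: sequence of booleans, one per video frame, True while the
        eye is judged to be closed (assumed to come from a blink detector).
    min_frames: assumed minimum duration of a deliberate blink, in frames.
    """
    blinks = []
    start = None
    for i, closed in enumerate(eye_closed):
        if closed and start is None:
            start = i  # a closure begins
        elif not closed and start is not None:
            if i - start >= min_frames:
                blinks.append((start, i - 1))
            start = None  # the closure has ended
    # Handle a closure that is still in progress at the end of the sequence.
    if start is not None and len(eye_closed) - start >= min_frames:
        blinks.append((start, len(eye_closed) - 1))
    return blinks
```

Short closures are silently dropped, so only the long, intentional ones would be passed on to the browser as commands.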

It is expected that the student will use high-level tools such as the OpenCV computer vision library and the face tracking components of the dlib Python machine learning library.

Prerequisites

- Experience in Python programming.

Further reading

- OpenCV Python tutorial

- dlib - A toolkit for making real world machine learning applications.

- An example blink detection research paper.

JPB-UG-5: Face pixelation for video anonymisation (CS/AI/Maths)

Description

It is often necessary to process images and video in order to anonymise people prior to broadcast or publication. This is typically done by blurring or pixelating faces. Previously this would have been performed using manual tools which require a human operator to place a bounding-box around each face in the image (or in each frame of video). Increasingly, this task is performed semi-automatically or fully automatically using face detection and tracking techniques.

This project will build and evaluate an automatic face pixelation tool designed to operate on video data. It will be able to make use of face detection and tracking tools in the OpenCV computer vision and dlib machine learning toolkits. There are many suitable annotated datasets available for evaluation, typically using video that can be downloaded from YouTube (e.g., see the following paper).
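The pixelation step itself is straightforward once a face bounding box is known. The following is a minimal sketch in plain Python, assuming a greyscale frame stored as nested lists and a box that lies inside the image. The function name, the box layout and the block size of 8 are hypothetical; in the project, the box would come from an OpenCV or dlib face detector and the frame would be a colour image.

```python
def pixelate_region(image, box, block=8):
    """Return a copy of `image` with the rectangle `box` pixelated.

    image: greyscale image as a list of rows of integer intensities.
    box: (top, left, bottom, right) with bottom and right exclusive;
        assumed to come from a face detector and to lie inside the image.
    block: side length of the square tiles, in pixels (an assumed value).
    """
    top, left, bottom, right = box
    out = [row[:] for row in image]  # leave the input frame untouched
    for y0 in range(top, bottom, block):
        for x0 in range(left, right, block):
            y1 = min(y0 + block, bottom)
            x1 = min(x0 + block, right)
            tile = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            average = sum(tile) // len(tile)
            # Replace every pixel in the tile with the tile's mean intensity.
            for y in range(y0, y1):
                for x in range(x0, x1):
                    out[y][x] = average
    return out
```

Larger values of `block` remove more detail; choosing a value that reliably prevents identification is one of the things an evaluation would need to examine.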

Prerequisites

- Experience in Python programming.

Further reading

- OpenCV Python tutorial

- dlib - A toolkit for making real world machine learning applications.

- Face detection and tracking paper including details of available datasets.

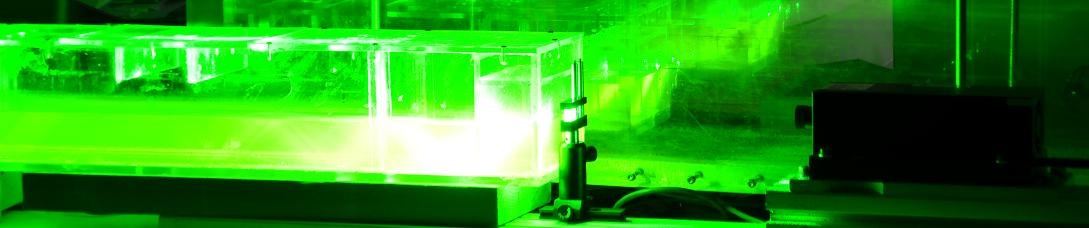

JPB-UG-8: Red Bull PIV video analysis

Description

Student-proposed project.

After spending a year on placement at Red Bull Technology, I have seen how the business likes to automate various steps in the workflows they use.

Because it is quite costly (performance-wise) to process images when performing comparisons and differentials, engineers have to analyse each image individually and remove saturated areas that they deem to hold no valid data. This reduces the amount of computation that is required to occur.

They have proposed a project where these images get processed automatically. This would involve analysing what makes a particular area saturated and ultimately determining whether or not this area is useful for the next stage of the process.

This project could involve 2 stages:

- Looking more scientifically at what makes a good processed image to determine how to automatically process the image and produce human-like results.

- Use Machine Learning and pre-existing data to create a system that can be trained to build more accurate processed images.

These could also involve research into performance and creating algorithms that have smoother edges. There would be quite a large scope for evaluation with this project. The data will be provided by Red Bull Technology, meaning that there would be rules with regard to the publication of the work.
